Human beings are known for many things, but above all for getting better on a consistent basis. This tendency to improve, no matter the situation, has brought the world some huge milestones, with technology emerging as a major one among them. We hold technology in such high regard largely because of its capabilities, which guided us towards a reality nobody could have imagined otherwise. Look beyond the surface, however, and it becomes clear that this progress was driven just as much by the way we applied those capabilities in real-world settings. That application gave technology a presence across the entire spectrum and, as a result, set off a full-blown tech revolution. The revolution went on to scale up the human experience through some truly unique avenues, and even after a feat so notable, technology continues to deliver. This has become more and more evident in recent times, and if one new discovery has its desired impact, it will only strengthen that trend moving forward.

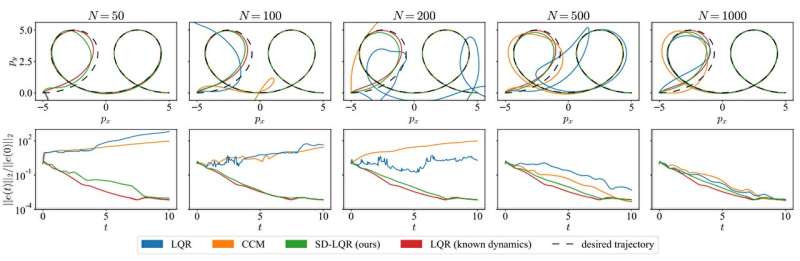

Research teams at the Massachusetts Institute of Technology and Stanford University have developed a new machine-learning approach that can be used to control robotic systems in environments where conditions change quickly. According to reports, the approach takes specific structure from control theory and builds it into the standard process of learning a model, in a way that yields an effective method for controlling complex dynamics. Once that structure is built into the learned model, the technique extracts an effective controller directly from the model itself. This is a major differentiator, considering that almost all other machine-learning methods require a controller to be derived or learned separately through extra steps. The technique also achieves this with significantly less data than other approaches, so the controller can still perform well in challenging settings where data is scarce.

“This work tries to strike a balance between identifying structure in your system and just learning a model from data,” said Spencer M. Richards, a graduate student at Stanford University and lead author of the study. “Our approach is inspired by how roboticists use physics to derive simpler models for robots. Physical analysis of these models often yields a useful structure for the purposes of control—one that you might miss if you just tried to naively fit a model to data. Instead, we try to identify similarly useful structure from data that indicates how to implement your control logic.”

The researchers have already conducted initial tests on their technique, and going by the available details, the controller extracted from their learned model nearly matched the performance of a ground-truth controller, which is built using the exact dynamics of the system. They also validated the approach’s data efficiency: in simulation, the technique could effectively model a highly dynamic rotor-driven vehicle using only 100 data points.
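To make the idea of comparing a controller taken from a learned model against a ground-truth controller more concrete, here is a minimal Python sketch. It is not the researchers' method. It assumes a hypothetical one-dimensional linear system `x_next = a*x + b*u`, fits `a` and `b` from 100 noisy samples by ordinary least squares, and then computes a standard LQR feedback gain once from the fitted model and once from the exact dynamics. Every name and number in it (`A_TRUE`, `B_TRUE`, the noise level, the cost weights) is an illustrative assumption.

```python
import random

# Hypothetical "exact" dynamics, chosen for illustration only:
# x_next = A_TRUE * x + B_TRUE * u  (unstable without control, since |A_TRUE| > 1)
A_TRUE, B_TRUE = 1.2, 0.5


def fit_linear_model(xs, us, xs_next):
    """Least-squares fit of x_next ~ a*x + b*u via the 2x2 normal equations."""
    sxx = sum(x * x for x in xs)
    sxu = sum(x * u for x, u in zip(xs, us))
    suu = sum(u * u for u in us)
    sxy = sum(x * y for x, y in zip(xs, xs_next))
    suy = sum(u * y for u, y in zip(us, xs_next))
    det = sxx * suu - sxu * sxu
    a = (sxy * suu - suy * sxu) / det
    b = (suy * sxx - sxy * sxu) / det
    return a, b


def lqr_gain(a, b, q=1.0, r=1.0, iters=500):
    """Scalar discrete-time LQR gain k (control law u = -k*x) by Riccati iteration."""
    p = q
    for _ in range(iters):
        p = q + a * a * p - (a * b * p) ** 2 / (r + b * b * p)
    return a * b * p / (r + b * b * p)


# Collect 100 noisy samples of the system (assumed noise level: 0.01).
rng = random.Random(0)
xs = [rng.uniform(-1.0, 1.0) for _ in range(100)]
us = [rng.uniform(-1.0, 1.0) for _ in range(100)]
xs_next = [A_TRUE * x + B_TRUE * u + rng.gauss(0.0, 0.01) for x, u in zip(xs, us)]

# Learn the model, then derive a controller from the learned model.
a_hat, b_hat = fit_linear_model(xs, us, xs_next)
k_learned = lqr_gain(a_hat, b_hat)

# "Ground-truth" controller built from the exact dynamics.
k_true = lqr_gain(A_TRUE, B_TRUE)

# Closed-loop pole of the TRUE system under the learned controller.
closed_loop = A_TRUE - B_TRUE * k_learned

print(f"learned model: a={a_hat:.4f}, b={b_hat:.4f}")
print(f"gain from learned model: {k_learned:.4f}, gain from exact dynamics: {k_true:.4f}")
print(f"closed-loop pole with learned gain: {closed_loop:.4f}")
```

In this toy setting the linear structure is simply assumed up front, so a small dataset is enough to recover a gain close to the ground-truth one. The approach described in the article goes further by identifying useful control-oriented structure from the data itself, for far more complex dynamics.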

For the future, the teams are planning to develop models that aren’t just more physically interpretable, but also better positioned to identify very specific information about a dynamical system.

“What I found particularly exciting and compelling was the integration of these components into a joint learning algorithm, such that control-oriented structure acts as an inductive bias in the learning process. The result is a data-efficient learning process that outputs dynamic models that enjoy intrinsic structure that enables effective, stable, and robust control. While the technical contributions of the paper are excellent themselves, it is this conceptual contribution that I view as most exciting and significant,” said Nikolai Matni, an assistant professor in the Department of Electrical and Systems Engineering at the University of Pennsylvania.